Daan Zonneveld

Solution Architect

7 May 2025, 5 min

Take control: Local LLMs and the fight for data privacy

Introduction

Local deployments of large language models are gaining serious momentum. As organizations push for more control over their data, infrastructure, and compliance posture, the case for on-premise AI is getting stronger, especially in privacy-sensitive sectors.

We spoke with Daan Zonneveld, Solution Architect at Hypersolid, about the drivers behind this shift, how open-source LLMs have evolved, and the proof of concept his team built: a free, local setup that lets any organization run its own LLM and explore this shift firsthand.

Interview

Q: A local LLM — what exactly is that?

A local LLM is essentially an AI model you run yourself, either on your laptop, your internal servers, or on infrastructure you manage directly. Instead of relying on public cloud platforms like OpenAI, Google, or Anthropic, you deploy and operate the model locally.

Those big providers host their models in massive GPU datacenters, the kind operated by Meta, Microsoft, and the other usual players. But now, thanks to the open-source ecosystem, you can take matters into your own hands. You might use a physical server in your office, rent GPU capacity from a specialized provider, or even run a model on a well-equipped laptop.

These aren’t the same models you get from proprietary APIs, but open-weight equivalents that you operate. You decide how the data flows. There’s no third-party operator and no blind trust required. That makes it an appealing option for organizations with strict privacy or compliance requirements.

Q: What’s changed in the last year or so?

The pace has been incredible. In the past 12 to 18 months, open-source models have gotten dramatically better. We’re seeing two trends.

One is the rise of models that run on consumer-grade devices, such as a laptop with a decent GPU. These models are reaching the quality level of GPT-3.5, the model behind ChatGPT when OpenAI launched it in late 2022. That's a big deal, especially for individuals or small teams.

The other trend is higher-end open models, which require serious hardware—like 120 to 180 GB of GPU memory—but come remarkably close to the performance of commercial models. These are still open, but powerful enough for more demanding use cases.
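
To put those memory figures in perspective, a common rule of thumb (an approximation, not a vendor specification) is that a model's weights alone take roughly its parameter count times the bytes used per parameter. The sketch below assumes 2 bytes per parameter for 16-bit weights, 1 for 8-bit, and 0.5 for 4-bit quantization, and ignores the KV cache and runtime overhead, which come on top.

```python
# Rule-of-thumb estimate (an assumption, not a vendor spec): memory for the
# weights = parameter count x bytes per parameter. KV cache, activations and
# runtime overhead are NOT included and add more on top.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Estimate memory in GB (1 GB = 1e9 bytes) taken by the weights alone.

    Billions of parameters x bytes per parameter gives GB directly.
    """
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for size in (8, 70):
        for precision in ("fp16", "int4"):
            print(f"{size}B @ {precision}: ~{weight_memory_gb(size, precision):.0f} GB")
```

Under these assumptions, a 70-billion-parameter model at 16-bit precision needs about 140 GB for its weights, within the range mentioned above, while 4-bit quantization brings the same model down to roughly 35 GB.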

And then there's a new generation of so-called reasoning models. Before answering, these models generate intermediate reasoning steps and feed them back into their own context, essentially thinking a few steps ahead. Interestingly, the first significant preview of this approach came from OpenAI's o1, with DeepSeek-R1 (an open-source model) quickly following with its own implementation. There's now a visible race between open and proprietary models, which is great for innovation.

Q: Who should be looking into local LLMs?

Anyone working with sensitive data should at least explore it. Personally, if I’m drafting a quick message about something trivial—say, my recycling bin wasn’t emptied—I don’t mind using ChatGPT. I assume the privacy risk is minimal.

But client data, financial information, or regulated content is a different story. Companies like ours that handle sensitive client data, as well as banks and governments, all hold data they simply can't hand over to a black box. Even if a provider promises not to use your inputs for training and to store them for only 30 days for security purposes, there's no hard guarantee. You're still taking their word for it.

On top of that, there are geographic and legal differences. A US-based provider may be subject to different obligations than an EU-hosted one, and Chinese providers may face even stricter state-driven data retention rules. So unless you have full clarity on where your data lives and who has access to it, you're operating in a legal gray zone, or worse.

Q: Can local models match the flexibility of cloud platforms?

Surprisingly, yes—especially when paired with AI agents. These are bits of software that can take actions based on what the model suggests. Whether the model runs in the cloud or locally, agents can help automate tasks, process inputs, or even control parts of your system.
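
As a minimal sketch of that idea, assume the model has been prompted to reply with a JSON object such as {"tool": "...", "args": {...}} (a hypothetical format, not a standard). The agent side parses the reply and runs only tools from an explicit allow-list. The tool names create_ticket and lookup_order are illustrative stand-ins, not part of any real product.

```python
import json
from typing import Callable


# Hypothetical tools the agent may call. In a real setup these would touch
# your own systems; here they are harmless stand-ins.
def create_ticket(title: str) -> str:
    return f"ticket created: {title}"


def lookup_order(order_id: str) -> str:
    return f"order {order_id}: shipped"


# Explicit allow-list: the model can only trigger what is registered here.
TOOLS: dict[str, Callable[..., str]] = {
    "create_ticket": create_ticket,
    "lookup_order": lookup_order,
}


def run_action(model_output: str) -> str:
    """Execute a tool call of the assumed form {"tool": name, "args": {...}}.

    Replies that are not such a JSON object are passed through as plain text.
    """
    try:
        action = json.loads(model_output)
    except json.JSONDecodeError:
        return model_output  # ordinary text answer, nothing to execute
    if not isinstance(action, dict):
        return model_output
    name = action.get("tool")
    tool = TOOLS.get(name) if isinstance(name, str) else None
    if tool is None:
        return f"refused: unknown tool {name!r}"  # never run unlisted actions
    return tool(**action.get("args", {}))
```

For example, passing the reply {"tool": "lookup_order", "args": {"order_id": "A17"}} returns "order A17: shipped", while a reply naming an unknown tool is refused rather than executed. The dispatch code is identical whether the model behind it runs in the cloud or locally.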

And there’s a broader issue at play: control. Not just over where your data is stored, but how the technology behaves. Look at Cursor—a code assistant that clearly outlines where data goes and how it’s processed. For EU users, that typically means US-based storage. Unless you’ve got scale and negotiating power, you can’t change that. So you either accept it or find tools that respect European values and regulations.

Even behavior matters. OpenAI recently rolled back an update to GPT-4o because users found it too "sycophant-y" and "annoying." That shift, driven by short-term feedback, actually damaged trust. It's a reminder that users need more say over how models behave. That, too, is part of the push for control.

Q: So, is there a clear business case? Can companies improve efficiency with local LLMs?

The biggest advantage isn't raw efficiency. It’s control and customization. That said, there is a strong case to be made for fine-tuning.

Let’s say you’ve got an open-source model trained on general data. As a company, you can fine-tune it with your own internal knowledge: procedures, tone of voice, proprietary terminology. The result is a custom model that reflects how your business works.

This fine-tuning process can also be done with commercial models. OpenAI, for example, lets you fine-tune some of its models and hosts the resulting custom versions for you. But again, that means handing over your data and relying on their infrastructure. If you want to keep everything local, fine-tuning an open model gives you that option, with full control.
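
Much of the practical work in fine-tuning is preparing examples. The sketch below converts hypothetical internal Q&A pairs into a chat-style JSONL layout (one {"messages": [...]} object per line). OpenAI's fine-tuning API and a number of open-source training tools accept this layout, but expected fields vary, so check the documentation of whichever tool you use. The company name, system prompt, and examples are invented for illustration.

```python
import json

# Hypothetical system prompt capturing tone of voice and terminology.
SYSTEM_PROMPT = "You are Acme's internal assistant. Use Acme terminology."

# Hypothetical internal Q&A pairs: procedures and proprietary terms.
EXAMPLES = [
    ("How do I request a new laptop?",
     "Open a ticket in the IT portal under 'Hardware'."),
    ("What does 'Blue Route' mean?",
     "Blue Route is our priority shipping lane for key accounts."),
]


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one Q&A pair in a chat-style training record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(pairs) -> str:
    """Serialize Q&A pairs as JSONL: one JSON object per line."""
    return "".join(
        json.dumps(to_chat_record(q, a), ensure_ascii=False) + "\n"
        for q, a in pairs
    )
```

Writing the training file is then a matter of saving the string, for instance with open("train.jsonl", "w", encoding="utf-8") as f: f.write(to_jsonl(EXAMPLES)). Because the data never leaves your machine, the whole pipeline stays local.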

Q: What has Hypersolid been working on in this space?

We developed a proof of concept to explore how to make local models truly accessible.

There's great software out there, such as the Ollama and llama.cpp runtimes, that lets you run models on your own hardware. You can even expose those models via an API, or run a web interface like Open WebUI locally through Python. But it's still fairly technical.
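
For a sense of what exposing a model via an API looks like, here is a minimal sketch that talks to Ollama's local REST endpoint (/api/chat on the default port 11434) using only the Python standard library. It assumes Ollama is installed and running; llama3.2 is just an example model name that you would need to pull first.

```python
import json
import urllib.request

# Ollama's default local address; adjust if you run it elsewhere.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_request(model: str, prompt: str,
                       url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming chat request for a locally running Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response, not a token stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(model, prompt), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["message"]["content"]
```

With Ollama running and the model pulled (ollama pull llama3.2), calling chat("llama3.2", "Summarize our leave policy in one sentence.") returns the reply text. The request only goes to localhost, so the prompt never leaves the machine.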

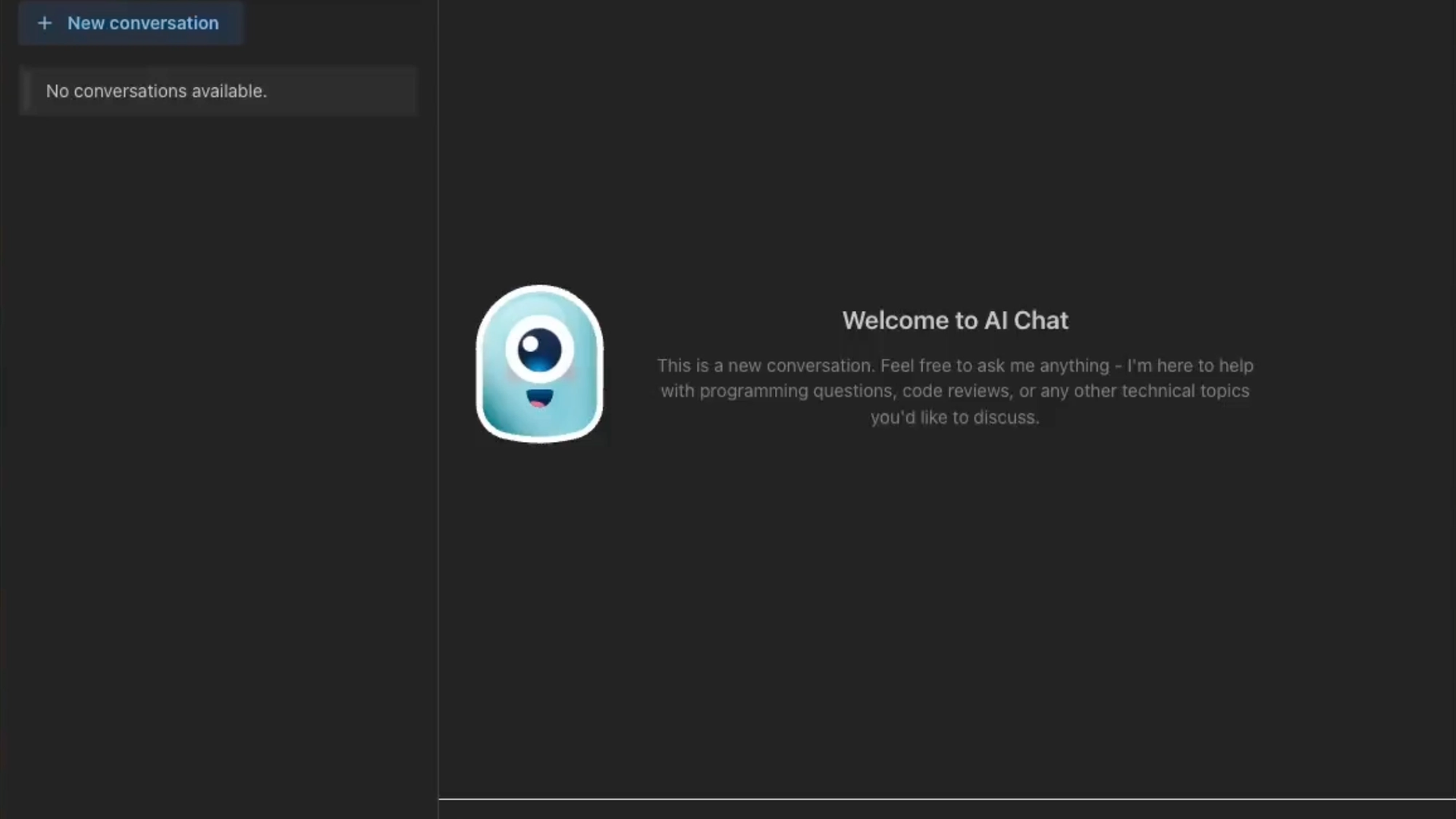

So we took it a step further. We built a desktop app you can install with one click. It handles the setup for you: selecting the right model based on your laptop specs, downloading it, launching the runtime. The user gets a chat interface much like ChatGPT, but running locally.
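
The app's exact selection logic isn't described here, so the following is only a hypothetical sketch of the idea: given the memory available on a machine, pick the largest model from a catalog that fits within a safety margin. The catalog entries, their memory figures, and the 75% headroom are illustrative assumptions. Detecting the actual GPU or RAM size is platform-specific and left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str           # hypothetical model tag
    required_gb: float  # approximate memory needed to run it


# Hypothetical catalog; the figures are illustrative, not measured.
CATALOG = [
    ModelOption("tiny-1b-q4", 1.5),
    ModelOption("small-3b-q4", 3.0),
    ModelOption("medium-8b-q4", 6.0),
    ModelOption("large-14b-q4", 10.0),
]


def pick_model(available_gb: float, headroom: float = 0.75) -> Optional[ModelOption]:
    """Return the largest catalog model that fits in a fraction of available memory.

    `headroom` reserves the rest for the OS, other apps and the KV cache.
    Returns None if nothing fits.
    """
    budget = available_gb * headroom
    fitting = [m for m in CATALOG if m.required_gb <= budget]
    return max(fitting, key=lambda m: m.required_gb, default=None)
```

With 16 GB available, the budget is 12 GB and the sketch picks large-14b-q4; with 8 GB it falls back to medium-8b-q4. A real installer would also weigh CPU versus GPU inference and download size.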

We also added document support. You can drag in files like PDFs or manuals, and the app builds an index. This is Retrieval-Augmented Generation (RAG): when you ask a question, the app searches those indexed documents, selects the relevant content, and includes it as context in the prompt to the model.
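
The index, retrieve, and prompt flow can be sketched in a few dozen lines. To stay self-contained, this sketch swaps in simple word-count cosine similarity where RAG systems more commonly use embedding vectors from a model. The chunk size, class and function names, and prompt wording are all assumptions for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 50) -> list[str]:
    """Split a document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyIndex:
    """In-memory index of document chunks (stand-in for a vector store)."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[Counter] = []

    def add_document(self, text: str) -> None:
        for piece in chunk(text):
            self.chunks.append(piece)
            self.vectors.append(Counter(tokenize(piece)))

    def search(self, query: str, k: int = 3) -> list[str]:
        """Return the k chunks most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(range(len(self.chunks)),
                        key=lambda i: cosine(q, self.vectors[i]), reverse=True)
        return [self.chunks[i] for i in ranked[:k]]


def build_prompt(index: TinyIndex, question: str, k: int = 3) -> str:
    """Prepend the retrieved chunks to the question as context."""
    context = "\n---\n".join(index.search(question, k))
    return ("Answer the question using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")
```

The resulting prompt is what gets sent to the local model. Swapping tokenize and cosine for an embedding model and a vector store changes the retrieval quality, not the overall flow.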

It’s still early days, but the experience is already intuitive. Install, chat, drop in your files, and go. For local-first AI, that’s a huge step forward.

To explore the proof of concept with no obligation, access it via GitHub.